Here at OrionX.net, we are fortunate to work with tech leaders across several industries and geographies, serving markets in Mobile, Social, Cloud, and Big Data (including Analytics, Cognitive Computing, IoT, Machine Learning, Semantic Web, etc.), and focused on pretty much every part of the “stack”, from chips to apps and everything in between. Doing this for several years has given us a privileged perspective. We spent some time discussing what we are seeing and captured some of the trends in this blog: our 2016 technology issues and predictions. We cut the list at 17, but we hope it is a quick read that you find worthwhile. Let us know if you’d like to discuss any of the items or the companies that are driving them.

1- Energy technology, risk management, and climate change refashion the world

Energy is arguably the most important industry on the planet. Advances in energy efficiency and sustainable energy sources, combined with the debate over (and observations of) climate change and new ways of managing capacity risk, are coming together to have a profound impact on the social and political structure of the world, as indicated by the Paris Agreement and the recent collapse in energy prices. These trends will deepen into 2016.

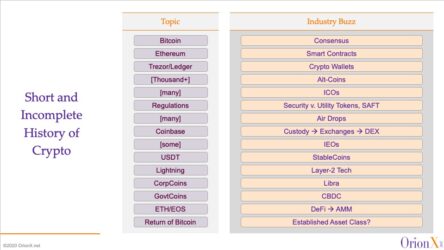

2- Cryptocurrencies drive modernization of money (the original virtualization technology)

Money was the original virtualization technology! It decoupled value from goods, simplified commerce, and enabled the service economy. Free from the limitations of physical money, cryptocurrencies can take a fresh approach to simplifying how value (and ultimately trust, in a financial sense) is represented, modified, transferred, and guaranteed in a self-regulated manner. While none of the existing implementations accomplishes that yet, they are becoming better understood, and the ecosystem built around them will point the way toward a true digital currency.

3- Autonomous tech remains a fantasy, technical complexity is in fleet networks, and all are subordinate to the legal framework

Whether flying, driving, walking, sailing, or swimming, drones and robots of all kinds are increasingly common. Full autonomy will remain a fantasy except for very well-defined and constrained use cases. Commercial success favors technologies that aim to augment a human operator. The technical complexity lies not in getting one of them to do an acceptable job, but in managing fleets of them as a single network. And all of it will be subordinate to an evolving and complex legal framework.

4- Quantum computing moves beyond “is it QC?” to “What can it do?”

A whole new approach to computing (as in, not binary anymore), quantum computing is as promising as it is unproven. Quantum computing goes beyond Moore’s law, since every additional quantum bit (qubit) doubles the size of the state space the machine can work with, similar to the famous wheat and chessboard problem. So the payoff is huge, even though the technology is, for now, expensive, unproven, and difficult to use. But new players will become more visible, early use cases and gaps will become better defined, new use cases will be identified, and a short stack will emerge to ease programming. This is reminiscent of the early days of computing, so a visit to the Computer History Museum would be a good recalibrating experience.
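The doubling behind the wheat and chessboard comparison can be made concrete with a little arithmetic. The sketch below is an illustration under stated assumptions, not a model of any real quantum machine: it assumes “computational power” is read as the number of basis states (amplitudes) an n-qubit register spans, which is 2^n, and it compares that growth with a chessboard where each square holds twice the grains of the previous one. The function names are hypothetical.

```python
# Illustrative sketch only. Assumption: "computational power" is read as the
# number of basis states (amplitudes) of an n-qubit register, i.e. 2**n.

def state_space_size(n_qubits: int) -> int:
    """Number of basis states (amplitudes) for an n-qubit register."""
    if n_qubits < 0:
        raise ValueError("n_qubits must be non-negative")
    return 2 ** n_qubits


def grains_on_square(square: int) -> int:
    """Grains on a given chessboard square (1-indexed); each square doubles the last."""
    if not 1 <= square <= 64:
        raise ValueError("square must be between 1 and 64")
    return 2 ** (square - 1)


def total_grains(squares: int = 64) -> int:
    """Total grains on the first `squares` squares, which equals 2**squares - 1."""
    return sum(grains_on_square(s) for s in range(1, squares + 1))


if __name__ == "__main__":
    # Each extra qubit doubles the count, just as each square doubles the grains.
    for n in (1, 2, 10, 50):
        print(f"{n} qubits -> {state_space_size(n):,} amplitudes")
    print(f"Full chessboard -> {total_grains():,} grains")
```

Simulating such a register on a classical computer means storing all 2^n amplitudes, which is why a few dozen qubits already outgrow ordinary memory. Whether a quantum machine can actually exploit that space for a given problem is exactly the “what can it do?” question.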

5- The “gig economy” continues to grow as work and labor are better matched

The changing nature of work and traditional jobs received substantial coverage in 2015. The prospect of artificial intelligence that could actually work is fueling fears of the wholesale elimination of jobs and management layers. On the other hand, employers routinely have difficulty finding talent, and employees routinely have difficulty staying engaged. There is a structural problem here. The “sharing economy” is one approach, albeit legally challenged in the short term. But the freelance and outsourcing approach is alive and thriving. In this model, everything is either an activity-sliced project or a time-sliced process, to be performed by the most suitable internal or external resources. Already, in Silicon Valley, it is common to see people carrying two or three business cards as they match their skills and passions to their work and livelihood more flexibly than the elusive “permanent” full-time job allows.

6- Design thinking becomes the new driver of customer-centric business transformation

With the tectonic shifts in technology, demographics, and globalization, companies must transform or else. Design thinking is a good way to bring customer-centricity further into a company and ignite employees’ creativity, going beyond traditional “data-driven needs analysis.” What is different this time is the intimate integration of arts and sciences. What remains the same is the sheer difficulty of translating complex user needs into products that are simple but not simplistic, and beautiful yet functional.

7- IoT: if you missed the boat on cloud, you can’t miss it on IoT too

Old-guard IT vendors will have the upper hand over new Cloud leaders as they all rush to claim IoT leadership. IoT is where Big Data Analytics, Cognitive Computing, and Machine Learning come together for entirely new ways of managing business processes. In its current (emerging) condition, IoT requires a lot more vertical specialization, professional services, and “solution-selling” than cloud computing did when it was in its relative infancy. This gives traditional big (and even small) IT vendors a chance to drive and define the terms of competition, possibly controlling the choice of cloud and software stack.

8- Security: Cloud-native, Micro-Zones, and brand-new strategies

Cybercrime is big business, and any organization with digital assets is vulnerable to attack. As Cloud and Mobile weaken IT’s control and IoT adds many more points of vulnerability, new strategies are needed. Cloud-native security technologies will include those that redirect traffic through SaaS-based filters, Micro-Zones that permeate security throughout an app, and brand-new approaches to data security.

9- Cloud computing drives further consolidation in hardware

In any value chain, a vendor must decide what value it offers and to whom. With cloud computing, the IT value chain has been disrupted. What used to be a system is now a piece of some cloud somewhere. As the real growth moves to “as-a-service” procurements, there will be fewer but bigger buyers of raw technology who drive hardware design towards scale and commoditization.

10- Composable infrastructure matures, leading to “Data Center as a System”

The computing industry was heading down the path of hardware partitioning when virtualization took over, and dynamic reconfiguration of hardware resources took a backseat to manipulating software containers. Infrastructure-as-code, composable infrastructure, converged infrastructure, and rack-optimized designs expand that concept. But container reconfiguration is insufficient at scale; what is needed is hardware reconfiguration across the data center. That is the next frontier, and the technologies to enable it are coming.

11- Mobile devices move towards OS-as-a-Service

Mobile devices are now capable enough that not every user needs every new feature, and new OS revisions often slow down the device. Even with free upgrades and pre-downloaded OS revisions, it is hard to get customers to upgrade, while power users jailbreak their devices to get new features on an old OS. Over time, new capabilities will be provided via more modular, dynamically loaded OS services: essentially a new class of apps, deeply integrated into the OS and downloaded on demand.

12- Social Media drives the Analytics Frontier

Nowhere are the demands on analytics for scale, flexibility, and effectiveness greater than in social media. This is far beyond Web Analytics. The seven largest “populations” in the world are Google, China, India, Facebook, WhatsApp, WeChat, and Baidu, in approximately that order, not to mention Amazon, Apple, Samsung, and several others, plus many important commercial and government applications that rely on social media datasets. Searching through such large datasets, with very large numbers of images, social commentary, and complex network relationships, stresses analytical algorithms far beyond anything seen before. The pace of algorithmic development for data analytics and for machine intelligence will accelerate, increasingly shaped by social media requirements.

13- Technical Debt continues to accumulate, raising the cost of eventual modernization

Legacy modernization will get more attention as micro-services, data-flow, and scale-out elasticity become established. But in the long term, software engineering is in dire need of the predictability and maintainability associated with other engineering disciplines. That need is not going away and may well require a wholesale new methodology for programming. In the meantime, technologies that help automate software modernization, or enable modular maintainability, will gain traction.

14- Tools emerge to relieve the DB-DevOps squeeze

The technical and operational burden on developers has been growing, and it is not sustainable. NoSQL databases removed the time delay and complexity of a data schema at the expense of more complex code, pulling developers closer to data management and persistence issues. DevOps, meanwhile, has pulled developers closer to the actual deployment and operation of apps, with the associated networking, resource allocation, and quality-of-service (QoS) issues. This is another “rubber band” that cannot stretch much more. As cloud adoption continues, development, deployment, and operations will become more synchronized, enabling more automation.

15- In-memory computing redefines straight-through apps

The idea of a “memory-only architecture” dates back several decades. New super-large memory systems are finally making it possible to hold entire data sets in memory. Combine this with Flash (and other emerging storage-class memory technologies) and you have the recipe for entirely new ways of achieving near-real-time/straight-through processing.

16- Multi-cloud will be used as a single cloud

Small and mid-size public cloud providers will form coalitions around a large market leader to offer enterprise customers the flexibility of cloud without the lock-in and the risk of having a single supplier for a given app. This opens the door for transparently running a single app across multiple public clouds at the same time.

17- Binary compatibility cracks

It’s been years since most app developers needed to know what CPU their app runs on, because they work on the higher levels of a tall software stack. Porting code still requires time and effort, but for elastic/stateless cloud apps, the work is mostly to make sure the software stack is there and works as expected. But the emergence of large cloud providers is changing the dynamics. They have the wherewithal to port any system software to any CPU, thus ensuring a rich software infrastructure. And they need to differentiate and cut costs. We are already seeing GPUs in cloud offerings and FPGAs embedded in CPUs. We will also see the first examples of special cloud regions based on one or more of ARM, OpenPower, MIPS, and SPARC. Large providers can now offer a usable cloud infrastructure using any hardware that is differentiated and economically viable, free from the requirement of binary compatibility.

OrionX is a team of industry analysts, marketing executives, and demand generation experts. With a stellar reputation in Silicon Valley, OrionX is known for its trusted counsel, command of market forces, technical depth, and original content.