Mktg_Podcast-50: Sustainable Success, AI vs Search

– Be Creative !?

– Complexity of success

– Repeatability of success

– SBUX re-success

– Nvidia continuous success

– AI vs. Search

The OrionX editorial team manages the content on this website.

Special guest JP Castlin joins us as we discuss a broad set of topics:

– Did you say “Praxis”?

– Strategy and Marketing

– Apple and DOJ

– Amazon’s Frequency of Sale Events

– Marketing and Math

– Efficiency and Resilience

– Strategy and Change

– Marketing Mix, can you brand, nurture, activate, sell all at the same time?

– Marketing of Apple Vision Pro

– Market Research, Quantitative, Qualitative, pitfalls

– Strategy, Change Management, Praxis

– Competence v. Confidence v. Complexity

– Bad Data v. Bad Attitude

– AI-Generated Content’s Copyright and DRM

– Strategy v. Change Management

Major news since our last (double edition) episode included what’s billed as the fastest AI supercomputer by Google, price hikes on chips by TSMC and Samsung, visualization of a black hole in our own galaxy, and IBM’s ambitious and well-executed quantum computing roadmap. We discuss how an AI supercomputer is different, an unexpected impact of chip shortages and price hikes, what it takes to visualize a black hole, and what IBM’s strategy looks to us from a distance.

Part 1: Strategy to Organization

In part one of this webinar series, we show you how to navigate organizational issues to build a trusted Competitive Intelligence function. We discuss various organizational models, key competitive processes, and the big rules that govern a successful effort.

Part 2: Organization to Intelligence

In part two of this webinar series, we look at techniques to collect and transform data into useful, actionable intelligence. This is where analytical methods prepare the ingredients but “synthetical” skills are required to transform those ingredients into the right position, a compelling competitive value proposition, and a sharp narrative.

Part 3: Intelligence to Action

In part three of this webinar series, you will learn the basic principles of how to turn competitive data/research into actionable sales resources (e.g., Beat Sheets, Sales Playbooks, competitive customer wins, etc.) You will also learn best practices for how to organize, maintain and provide access to competitive material—all based on our 20+ years of experience building competitive intelligence functions for tech companies.

OrionX is a team of industry analysts, marketing executives, and demand generation experts. With a stellar reputation in Silicon Valley, OrionX is known for its trusted counsel, command of market forces, technical depth, and original content.

All the technologies that we study, track, and help market have significant global impact. That awareness very much informs our writing. Sometimes, we address global and national security issues more directly. See for example National Security and Blockchain Technology. We will be writing more in that vein as the impact of technology on policy becomes more visible. Here is an excerpt and outline of an article published on LinkedIn.

Policy decisions to re-open the economy will be complex. They will also be politically influenced at a time when there is little room for error and decisions must be based on science and accurate data. This is not to say that we must ignore the unpredictabilities of our time. To the contrary, this is about preparedness and the ability to not just anticipate, but to respond to changes. It is about best-possible outcomes, not efficiency for its own sake.

I hope the slow-down in new cases continues to zero. But it is important to be prepared for a plateau vs. a continuous drop to zero, and to expect it to come back if we declare victory too soon without enough measures in place that would keep it down.

There are several ways we can address the problem. They all contribute to whatever policy we adopt. Only real progress counts, not claims and hopes.

Read the full article here.

Shahin is a technology analyst and an active CxO, board member, and advisor. He serves on the board of directors of Wizmo (SaaS) and Massively Parallel Technologies (code modernization) and is an advisor to CollabWorks (future of work). He is co-host of the @HPCpodcast, Mktg_Podcast, and OrionX Download podcast.

National Security considerations are often intimately intertwined with the adoption of new technologies.

Last year Tokyo hosted a meeting of the International Organization for Standardization (ISO), including a session on blockchain technology to examine ideas around standards for blockchain and distributed ledgers.

A member of the Russian delegation, who is part of their intelligence apparatus at the FSB, apparently said “the Internet belonged to the US, the Blockchain will belong to Russia.” In fact three of the four Russian delegates were FSB agents! By contrast, Chinese attendees were from the Finance Ministry, and American attendees were representing major technology companies, reportedly IBM and Microsoft among others.

Let’s unpack this a bit. The Internet grew out of a US military funded program, Arpanet, and the US has been the dominant player in Internet technology due to the strength of its research community and its technology companies in particular.

As we wrote in our most recent blog (https://orionx.net/2018/05/is-blockchain-the-key-to-web-3-0/), blockchain has the potential to significantly impact the Internet’s development, as a key Web 3.0 technology.

Blockchain and the first cryptocurrency, Bitcoin, were developed by an unknown person or persons, with pen name Satoshi Nakamoto. Based on email timestamps, the location may have been New York or London, so American or British citizenship for Bitcoin’s inventor seems likely, but that is speculation.

More to the point, the US is the center of blockchain funding and development activity, while China in particular has been playing a major role in mining and cryptocurrency development.

There are many Russian and Eastern European developers and ICO promoters in the community as well. The Baltic nations bordering Russia and the Russian diaspora community have been particularly active.

The second most valuable cryptocurrency after Bitcoin is Ethereum, which was invented by a Russian-Canadian, Vitalik Buterin. Buterin famously met with Russian President Vladimir Putin in 2017. Putin is himself of course a former intelligence agent.

The Russians reportedly want to influence the cryptographic standards around blockchain. This immediately raises fears of a backdoor accessible to Russian intelligence. Russia is also considering the idea of a cryptocurrency as a way to get around sanctions imposed by the American and European governments.

The Russian government has a number of blockchain projects. The government-run Sberbank had initial implementation of a document storage blockchain late last year. There is draft regulation around cryptocurrency working its way through the Russian parliament. President Putin has said that Russia cannot afford to fall behind in blockchain technology.

Given the broad array of applications being developed for cryptocurrencies, including money transfer, asset registration, identity, voting, data security, and supply chain management among others, national governments have critical interests in the technology.

China has been cracking down on ICOs and mining, but it is clear they think blockchain is important and they want to be in control. Most of their government concerns and interest appear to be centered around the potential in finance, such as examining the possibility of a national cryptocurrency (cryptoYuan).

China would like to wriggle free from the dollar standard that dominates trade and their currency reserves. They have joined the SDR (foreign reserve assets of the IMF) and have been building their stocks of gold as two alternatives to the dollar.

China’s biggest international initiative is around a new ‘Silk Road’, the One Belt, One Road initiative for infrastructure development across EurAsia and into the Middle East and Africa. One could imagine a trading currency in conjunction with this, a “SilkRoadCoin”. In fact, the government-run Belt and Development Center has just announced an agreement with Matrix AI as blockchain partner. Matrix AI is developing a blockchain that will support AI-based consensus mechanisms and intelligent contracts.

China’s One Belt One Road Initiative actually has six land corridors and a maritime corridor.

(Image credit: CC 4.0, author: Lommes)

The American military is taking interest in blockchain technology. DARPA believes that blockchain may be useful as a cybersecurity shield. The US Navy has a manufacturing related application around the concept of Digital Thread for secure registration of data across the supply chain.

In fact, the latest National Defense Authorization Act requires the Pentagon to assess the potential of blockchain for military deployment and to report its findings to Congress, with an initial report due this month.

What is clear is that blockchain and distributed ledger technology have the potential to be of major significance in national security and development for the world’s leading nations.

A range of efforts are underway by government, industry, and academia to understand blockchain technology and cryptocurrencies, to enhance the technology, and plan for the future. In that context, we see potential in the technology to impact many facets of society and global dynamics. That potential is now sufficiently developed for us to advocate a more visible presence by government agencies in helping shape the policy and academic research.

We encourage the US government to increase engagement with blockchain and distributed ledger technology. This can include funding university research and running pilot projects with industry across various government agencies, in particular the military and intelligence communities, the Federal Reserve, the Department of Energy, NOAA, and NASA.

Also the federal government should pursue standards development under the auspices of the NIST and together with ISO. Individual state governments are also promising laboratories for projects around identity, voting, and title registration.

Information has always been key to warfare. But there is little doubt that warfare is increasingly moving toward a battlefield within the information sphere itself. These are wars directed against the civilian population; these are wars for peoples’ minds. Blockchain technologies could play a significant role in these present and future battles, both defensively and offensively.

America was founded and grew rapidly largely in the context of the Industrial Revolution. The Information Age provides a similar opportunity and responsibility to set the course for the next century and beyond. As before, getting it right will not only assure the country’s continued success and leadership; it will also arm the nation to solve problems that affect all of humanity.

References:

DARPA https://www.google.co.th/amp/s/cointelegraph.com/news/pentagon-thinks-blockchain-technology-can-be-used-as-cybersecurity-shield/amp

US Navy http://www.secnav.navy.mil/innovation/Pages/2017/06/BlockChain.aspx

2018 National Defense Authorization Act https://www.realcleardefense.com/articles/2018/05/03/could_americas_cyber_competitors_use_blockchain_for_their_defense_113400.html

NIST https://csrc.nist.gov/publications/detail/nistir/8202/draft

http://www.scmp.com/business/china-business/article/2136188/beijing-signals-it-wants-become-front-runner-blockchain

https://www.cbinsights.com/research/future-of-information-warfare/

Stephen Perrenod has lived and worked in Asia, the US, and Europe and possesses business experience across all major geographies in the Asia-Pacific region. He specializes in corporate strategy for market expansion, and cryptocurrency/blockchain on a deep foundation of high performance computing (HPC), cloud computing and big data. He is a prolific blogger and author of a book on cosmology.

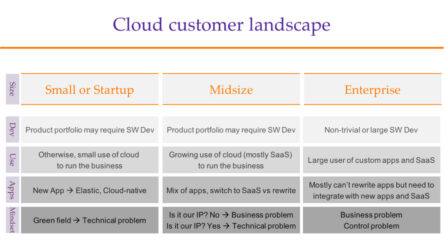

There is a loudly expressed opinion that ‘the cloud’ is the future of computing and that companies such as Amazon, Google, and Microsoft will dominate the market, replacing established hardware and software vendors such as IBM, Dell, HPE, and Oracle. In some ways, this is true especially for startups, small companies, and perhaps even some midsize companies, but the situation for enterprise level deployments is very different.

For small companies, the use of cloud services minimizes the need for capital expenditure on infrastructure and the recruitment of IT specialists, accelerating their time to production, possibly at the expense of some customization.

By contrast, enterprise level organizations typically have a substantial reliance on established business critical applications. Many of these applications cannot easily be rewritten or re-deployed on public cloud architectures. Sometimes the systems they run on are not based on the x86 architecture, further complicating matters.

Existing enterprise applications, however, can and often need to be integrated with new cloud services to deliver a modern infrastructure as a cloud service while ensuring business continuity. This hybrid approach is an optimum combination of private and public cloud services. It leverages existing data center and infrastructure resources and combines elastic cloud services from external providers when needed. A hybrid cloud is particularly advantageous for businesses where regulation and compliance affect where and how data may be located and accessed, or where large-memory scale-up data systems are UNIX-based and not well suited to, or compatible with, scale-out Intel-based architectures.

Enterprise customers are much more concerned with a business solution to their cloud problem than just a technical solution. Minimizing costly changes is valuable to them as long as the underlying technology has a reasonable and real roadmap. For them, a mixture of private and public cloud can address multiple requirements:

This approach focuses on business optimization, integrates and consolidates disparate systems, and offers a flexible choice of on-premises, managed on-premises, or off-premises cloud.

For enterprise customers, this is a critical transformation. The service provider model is generally accepted as the way forward, but successfully navigating the journey requires the integration of existing business critical systems with new and agile applications. This requires differentiation at a business service level. It is not technology driven, but it must incorporate both the old and the new while optimizing capital costs and operational margins.

Cloud computing means fewer but larger buyers of infrastructure technologies. Cloud service providers such as Amazon and Google buy standardized systems in large volumes. Other CPU and infrastructure vendors have doubled down on the capabilities of their architectures to support both legacy and modern systems.

Among established technologies, x86 continues to dominate at the CPU level but is unable to reach a large body of enterprise applications that were written for IBM, HP, and Oracle/Sun. HP has effectively moved to x86 and is not offering its own public cloud service. IBM has formed the OpenPower consortium as it attempts to build an ecosystem and is building its Softlayer cloud service, but relies on application software partners to complete the stack. This leaves Oracle in the unique position of offering chips-to-apps-to-cloud in one place supporting x86 as well as its own SPARC chip (with the SPARC Dedicated Compute Service). As the only UNIX public cloud service available from a major systems or cloud vendor, Oracle has a robust roadmap with everything available on-premises, managed-on-premises, and off-premises.

For most enterprises, a successful transition to cloud service-based infrastructures is more important than a rapid transition. There are many market research reports that discuss the nature and pace of cloud adoption in ‘the Enterprise’, but one thing they have in common is the limited pace and scope of public cloud adoption. At the end of the day, it is business and workload considerations that drive decisions, not technology.

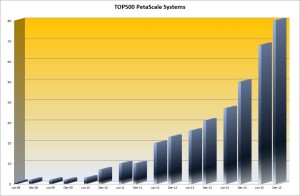

While the graph (same link as the image) is slightly dated (2015), the trends have not changed and the presented results are still indicative. The common trends demonstrate that dependence upon isolated on-premises systems, even if virtualized, is declining. Private cloud, whether on-premises or hosted, is and will be a substantial part of the market, but the sector that is growing and seems destined to dominate is hybrid cloud, a combination of on-premises, hosted, and public cloud services.

For many enterprises, the challenge is to find a vendor that can offer all of those things with a viable business model. We live in very interesting times when the only global technology vendor that appears to be able to meet all the criteria is Oracle, with a viable alternative to Intel Architecture in the form of SPARC.

Here at OrionX.net, our research agenda is driven by the latest developments in technology. We are also fortunate to work with many tech leaders in nearly every part of the “stack”, from chips to apps, who are driving the development of such technologies.

In recent years, we have had projects in Cryptocurrencies, IoT, AI, Cybersecurity, HPC, Cloud, Data Center, … and often a combination of them. Doing this for several years has given us a unique perspective. Our clients see value in our work since it helps them connect the dots better, impacts their investment decisions and the options they consider, and assists them in communicating their vision and strategies more effectively.

We wanted to bring that unique perspective and insight directly to you. “Simplifying the big ideas in technology” is how we’d like to think of it.

Thus was born our first podcast/slidecast series: The OrionX Download™

The OrionX Download is both a video slidecast (visuals but no talking heads) and an audio podcast in case you’d like to listen to it.

Every two weeks, co-hosts Dan Olds and Shahin Khan, and other OrionX analysts, discuss some of the latest and most important advances in technology. If our research has an especially interesting finding, we’ll invite guests to probe the subject and add their take.

Please give it a try and let us know what we can do better or if you have a specific question or topic that you’d like us to cover.

Shahin is a technology analyst and an active CxO, board member, and advisor. He serves on the board of directors of Wizmo (SaaS) and Massively Parallel Technologies (code modernization) and is an advisor to CollabWorks (future of work). He is co-host of the @HPCpodcast, Mktg_Podcast, and OrionX Download podcast.

Just as Intel, the king of CPUs and the very bloodstream of computing, announced that it is ending its annual Intel Developer Forum (IDF), NVIDIA, the king of GPUs and the fuel of Artificial Intelligence, is holding its biggest annual GPU Technology Conference (GTC) yet, this week in San Jose. Coincidence? Hardly.

With something north of 95 per cent market share in laptops, desktops, and servers, Intel-the-company is far from even looking weak. Indeed, it is systematically adding to its strengths with a strong x86 roadmap, indigenous GPUs of its own, acquisition of budding AI chip vendors, pushing on storage-class memory, and advanced interconnects.

But a revolution is nevertheless afoot. The end of CPU-dominated computing is upon us. Get ready to turn the page.

Digitization means lots of data, and making sense of lots of data increasingly looks like either an AI problem or an HPC problem (which are similar in many ways, see “Is AI the Future of HPC?”). Either way, it includes what we call High Density Processing: GPUs, FPGAs, vector processors, network processing, or other, new types of accelerators.

GPUs made deep neural networks practical, and it turns out that after decades of slow progress in AI, such Deep Learning algorithms were the missing ingredient to make AI effective across a range of problems.

NVIDIA was there to greet this turn of events and has ridden it to strong leadership of a critical new wave. It’s done all the right things and executed well in its usual competent manner.

A fast-growing lucrative market means competition, of course, and a raft of new AI chips is around the corner.

Intel already acquired Nervana and Movidius. Google has its TPU, and IBM its neuromorphic chip, TrueNorth. Other AI chip efforts include Mobileye (the company is being bought by Intel), Graphcore, BrainChip, TeraDeep, KnuEdge, Wave Computing, and Horizon Robotics. In addition, there are several well-publicized and respected projects like NeuRAM3, P-Neuro, SpiNNaker, Eyeriss, and krtkl going after different parts of the market.

One thing that distinguishes Nvidia is how it addresses several markets with pretty much a single underlying platform. From automobiles to laptops to desktop to gaming to HPC to AI, the company manages to increase its Total Available Market (TAM) with minimal duplication of effort. It remains to be seen whether competitive chips that are super optimized for just one market can get enough traction to pose a serious threat.

The world of computing is changing in profound ways and chips are once again an area of intense innovation. The Silicon in Silicon Valley is re-asserting itself in a most welcome manner.

________

Note: A variation of this article was originally published in The Register, “The rise of AI marks an end to CPU dominated computing”.

Shahin is a technology analyst and an active CxO, board member, and advisor. He serves on the board of directors of Wizmo (SaaS) and Massively Parallel Technologies (code modernization) and is an advisor to CollabWorks (future of work). He is co-host of the @HPCpodcast, Mktg_Podcast, and OrionX Download podcast.

A variation of this article was originally published in The Register, “Wow, what an incredible 12 months: 2017’s data center year in review”, Feb 6, 2017.

The data center market is hot, especially now that we are getting a raft of new stuff, from promising non-Intel chips and system architectures to power and cooling optimizations to new applications in Analytics, IoT, and Artificial Intelligence.

Here are my Top-10 Data Center Predictions for 2017:

Data centers are information factories with lots of components and moving parts. There was a time when companies started becoming much more complex, which fueled the massive enterprise resource planning market. Managing everything in the data center is in a similar place. To automate, monitor, troubleshoot, plan, optimize, cost-contain, report, etc, is a giant task, and it is good to see new apps in this area.

Data center infrastructure management provides visibility into and helps control IT. Once it is deployed, you’ll wonder how you did without it. Some day, it will be one cohesive thing, but for now, because it’s such a big task, there will be several companies addressing different parts of it.

Cloud is the big wave, of course, and almost anything that touches it is on the right side of history. So, private and hybrid clouds will grow nicely and they will even temper the growth of public clouds. But the growth of public clouds will continue to impress, despite increasing recognition that they are not the cheapest option, especially for the mid-size users.

AWS will lead again, capturing most new apps. However, Azure will grow faster, on the strength of both landing new apps and also bringing along existing apps where Microsoft maintains a significant footprint.

Moving Exchange, Office, and other apps to the cloud, in addition to operating lots of regional data centers and having lots of local feet on the ground will help.

Some of the same dynamics will help Oracle show a strong hand and get close to Google and IBM. And large telcos will stay very much in the game. Smaller players will persevere and even grow, but they will also start to realize that public clouds are their supplier or partner, not their competition! It will be cheaper for them to OEM services from bigger players, or offer joint services, than to build and maintain their own public cloud.

Just as the hottest thing in enterprise becomes Big Data, we find that the most expensive part of computing is moving all that data around. Naturally, we start seeing what OrionX calls “In-Situ Processing” (see page 4 of the report in the link): instead of data going to compute, compute would go to data, processing it locally wherever data happens to be.

But as the gap between CPU speed and storage speed separates apps and data, memory becomes the bottleneck. In comes storage class memory (mostly flash, with a nod to other promising technologies), getting larger, faster and cheaper. So, we will see examples of apps using a Great Wall of persistent memory, built by hardware and software solutions that bridge the size/speed/cost gap between traditional storage and DRAM. Eventually, we expect programming languages to naturally support byte-addressable persistent memory.

Vendors already configure and sell racks, but they often populate them with servers that are designed as if they’d be used stand-alone. Vendors with rack-level thinking have been doing better because designing the rack vs the single node lets them add value to the rack while removing unneeded value from server nodes.

So server vendors will start thinking of a rack, not a single-node, as the system they design and sell. Intel’s Rack Scale Architecture has been on the right track, a real competitive advantage, and an indication of how traditional server vendors must adapt. The server rack will become the next level of integration and what a “typical system” will look like. Going forward, multi-rack systems are where server vendors have a shot at adding real value. HPC vendors have long been there.

Traditional enterprise apps – the bulk of what runs on servers – will show that they have access to enough compute capacity already. Most of that work is transactional, so their growth is correlated with the growth in GDP, minus efficiencies in processing.

New apps, on the other hand, are hungry, but they are much more distributed, more focused on mobile clients, and more amenable to what OrionX calls “High-Density Processing”: algorithms that have a high ops/byte ratio running on hardware that provides similarly high ops/byte capability, i.e., compute accelerators like GPUs, FPGAs, vector processors, manycore CPUs, ASICs, and new chips on the horizon.
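As a rough illustration of the ops/byte idea, here is a minimal sketch that compares two textbook kernels. The function names, the 8-byte word size, and the idealized assumption that each operand is moved through memory exactly once are ours, for illustration only; real hardware and real apps will behave differently.

```python
# Illustrative sketch only: estimates an ops/byte ratio for two textbook kernels.
# Assumptions (ours, not from any vendor spec): 8-byte (64-bit) words, and each
# input/output element crosses the memory interface exactly once.

def vector_add_ops_per_byte(n: int, word_bytes: int = 8) -> float:
    """c[i] = a[i] + b[i] for n elements."""
    ops = n                            # one addition per element
    bytes_moved = 3 * n * word_bytes   # read a, read b, write c
    return ops / bytes_moved

def matmul_ops_per_byte(n: int, word_bytes: int = 8) -> float:
    """C = A * B for dense n-by-n matrices."""
    ops = 2 * n ** 3                       # n^3 multiplies plus n^3 additions
    bytes_moved = 3 * n * n * word_bytes   # read A, read B, write C (ideal reuse)
    return ops / bytes_moved

if __name__ == "__main__":
    n = 1200
    print(f"vector add: {vector_add_ops_per_byte(n):.4f} ops/byte")
    print(f"matmul:     {matmul_ops_per_byte(n):.1f} ops/byte")
```

Under these assumptions, vector addition stays at a constant 1/24 ops/byte no matter how large the vectors get, while dense matrix multiplication grows as n/12. That difference is one way to see why some workloads are a better fit for accelerators than others.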

On top of that, there will be more In-Situ Processing: processing the data wherever it happens to be, say locally on the client vs sending it around to the backend. This will be made easier by the significant rise in client-side computing power and more capable data center switches and storage nodes that can do a lot of local processing.

We will also continue to see cloud computing and virtualization eliminate idle servers and increase the utilization rates of existing systems.

Finally, commoditization of servers and racks, driven by fewer-but-larger buyers and standardization efforts like the Open Compute Project, put pressure on server costs and limit the areas in which server vendors can add value. The old adage in servers: “I know how to build it so it costs $1m, but don’t know how to build it so it’s worth $1m” will be true more than ever.

These will all combine to keep server revenues in check. We will see 5G’s wow-speeds but modest roll-out, and though it can drive a jump in video and some server-heavy apps, we’ll have to wait a bit longer.

The real battle in server architecture will be between Intel’s in-house coalition and what my colleague Dan Olds has called the Rebel Alliance: IBM’s OpenPower industry coalition. Intel brings its all-star team: Xeon Phi, Altera, Omni-Path (plus Nervana/Movidius) and Lustre, while OpenPower counters with a dream team of its own: POWER, Nvidia, Xilinx, and Mellanox (plus TrueNorth) and GPFS (Spectrum Scale). Then there is Watson which will become more visible as a differentiator, and a series of acquisitions by both camps as they fill various gaps.

The all-in-house model promises seamless integration and consistent design, while the extended team offers a best-of-breed approach. Both have merits. Both camps are pretty formidable. And there is real differentiation in strategy, design, and implementation. Competition is good.

One day in the not too distant future, your fancy car will break down on the highway for no apparent reason. It will turn out that the auto entertainment system launched a denial of service attack on the rest of the car, in a bold attempt to gain control. It even convinced the nav system to throw in with it! This is a joke, of course, but could become all too real if/when human and AI hackers get involved.

IoT is coming, and with it will come all sorts of security issues. Do you know where your connected devices are? Can you really trust them?

For the first time in decades, there is a real market opening for new chips. What drove this includes:

This will be a year when many new chips become available and get tested, and there is a pretty long list of them, showing just how big the opportunity is, how eager investors must have been to not miss out, and how many different angles there are.

In addition to AI, there are a few important general-purpose chips being built. The coolest one is by startup Rex Computing, which is working on a chip for exascale, focused on very high compute-power/electric-power ratios. Qualcomm and Cavium have manycore ARM processors, Oracle is advancing SPARC quite well, IBM’s POWER continues to look very interesting, and Intel and AMD push the X86 envelope nicely.

With AI chips, Intel already has acquired Nervana and Movidius. Google has its TPU, and IBM its neuromorphic chip, TrueNorth. Other AI chip efforts include Mobileye (the company is being bought by Intel since this article was written), Graphcore, BrainChip, TeraDeep, KnuEdge, Wave Computing, and Horizon Robotics. In addition, there are several well-publicized and respected projects like NeuRAM3, P-Neuro, SpiNNaker, Eyeriss, and krtkl going after different parts of the market. That’s a lot of chips, but most of these bets, of course, won’t pay off.

Speaking of chips, ARM servers will remain important but elusive. They will continue to make a lot of noise and point to significant wins and systems, but fail to move the needle when it comes to revenue market share in 2017.

As a long-term play, ARM is an important phenomenon in the server market – more so now with the backing of SoftBank, a much larger company apparently intent on investing and building, and various supercomputing and cloud projects that are great proving grounds.

But in the end, you need differentiation and ARM has the same problem against X86 as Intel’s Atom had against ARM. Atom did not differentiate vs ARM any more than ARM is differentiating vs Xeon.

Most systems end up being really good at something, however, and there are new apps and an open-source stack to support existing apps, which will help find specific workloads where ARM implementations could shine. That will help the differentiation become more than “it’s not X86”.

What is going on with big new apps? They keep getting more modular (microservices), more elastic (scale out), and more real-time (streaming). They’ve become their own large graph, in the computer science sense, and even more so with IoT (sensors everywhere plus In-Situ Processing).

As apps became services, they began resembling a network of interacting modules, depending on each other and evolving together. When the number of interacting pieces keeps increasing, you’ve got yourself a fabric. But as an app, it’s the kind of fabric that evolves and has an overall purpose (semantic web).

Among engineering disciplines, software engineering already doesn’t have a great reputation for predictability and maintainability. More modularity is not going to help with that.

But efforts to manage interdependence and application evolution have already created standards for structured modularity like the OSGi Alliance for Java. Smart organizations have had ways to reduce future problems (technical debt) from the get-go. So, it will be nice to see that type of methodology get better recognized and better generalized.

OK, we will stop here for now, leaving several interesting topics for later.

What do you think?

Shahin is a technology analyst and an active CxO, board member, and advisor. He serves on the board of directors of Wizmo (SaaS) and Massively Parallel Technologies (code modernization) and is an advisor to CollabWorks (future of work). He is co-host of the @HPCpodcast, Mktg_Podcast, and OrionX Download podcast.

Vector processing was an unexpected topic to emerge from the International Supercomputing Conference (ISC) held last week.

On the Monday of the conference, a new leader on the TOP500 list was announced. The Sunway TaihuLight system uses a new processor architecture that is Single-Instruction-Multiple-Data (SIMD) with a pipeline that can do eight 64-bit floating-point calculations per cycle.

This started us thinking about vector processing, a time-honored system architecture that started the supercomputing market. When microprocessors advanced enough to enable massively parallel processing (MPP) systems and then Beowulf and scale-out clusters, the supercomputing industry moved away from vector processing and led the scale-out model.

Later that day, at the “ISC 2016 Vendor Showdown”, NEC had a presentation about its project “Aurora”. This project aims to combine x86 clusters and NEC’s vector processors in the same high bandwidth system. NEC has a long history of advanced vector processors with its SX architecture. Among many achievements, it built the Earth Simulator, a vector-parallel system that was #1 on the TOP500 list from 2002 to 2004. At its debut, it had a substantial (nearly 5x) lead over the previous #1.

Close integration of accelerator technologies with the main CPU is, of course, a very desirable objective. It improves programmability and efficiency. Along those lines, we should also mention the Convey system, which goes all the way, extending the X86 instruction set, and performing the computationally intensive tasks in an integrated FPGA.

A big advantage of vector processing is that it is part of the CPU with full access to the memory hierarchy. In addition, compilers can do a good job of producing optimized code. For many codes, such as in climate modelling, vector processing is quite the right architecture.

Vector parallel systems extended the capability of vector processing and reigned supreme for many years, for very good reasons. But MPPs pushed vector processing back, and GP-GPUs pushed it further still. GPUs leverage the high volumes that the graphics market provides and can provide numerical acceleration with some incremental engineering.

But as usual, when you scale more and more, you scale not just capability, but also complexity! Little inefficiencies start adding up until they become a serious issue. At some point, you need to revisit the system and take steps, perhaps drastic steps. The Sunway TaihuLight system atop the TOP500 list is an example of this concept. And there are new applications like deep learning that look like they could use vectors to quite significant advantage.

There are other efforts towards building new exascale-class CPUs, such as the “Neo processor” that Rex Computing is developing.

Will vector processing re-emerge as a lightweight, high-performing architecture?

What is the likelihood?

Today, we are announcing the OrionX Constellation™ research framework as part of our strategy services.

Paraphrasing what I wrote in my last blog when we launched 8 packaged solutions:

One way to see OrionX is as a partner that can help you with content, context, and dissemination. OrionX Strategy can create the right content because it conducts deep analysis and provides a solid perspective in growth segments like Cloud, HPC, IoT, Artificial Intelligence, etc. OrionX Marketing can put that content in the right context by aligning it with your business objectives and customer needs. And OrionX PR can build on that with new content and packaging to pursue the right dissemination, whether by doing the PR work itself or by working with your existing PR resources.

Even if you only use one of the services, the results are more effective because strategy is aware of market positioning and communication requirements; marketing benefits from deep industry knowledge and technical depth; and PR can set a reliable trajectory armed with a coherent content strategy.

The Constellation research is all about high quality content. That requires the ability to go deep on technologies, market trends, and customer sentiment. Over the past two years, we have built such a capability.

Given the stellar connotations of the name Orion (not to mention our partner Stephen Perrenod’s book and blog about quantum physics and astrophysics), it is natural that we would see the players in each market segment as stars in a constellation. Constellations interact and evolve, and stars are of varying size and brightness, their respective positions dependent on cosmic (market) forces and your (customers’) point of view. Capturing that reality in a relatively simple framework has been an exciting achievement that we are proud to unveil.

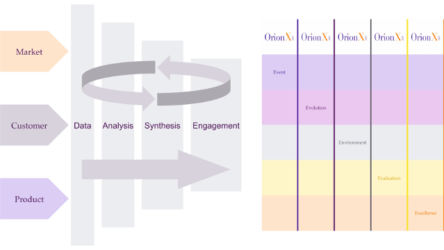

The OrionX strategy process collects and organizes data in three categories: market, customer, and product. The Constellation process further divides that into two parameters in each category:

This data is then analyzed to identify salient points which are in turn synthesized into the coherent insights that are necessary for a clear understanding of the dynamics at play and effective market conversations and change management.

The resulting content leads to five types of reports that capture the OrionX perspective on a segment. We call these the five Es:

Visualizing six distinct parameters, each with its own axis, in three categories cannot be done with the traditional 2×2 or 3×3 diagrams. Indeed, existing models in the industry do quite a good job of providing simple diagrams that rank vendors in a segment.

To simplify this visualization, we have developed the OrionX Constellation Decision Tool. This diagram effectively visualizes the six parameters that capture the core of technology customers’ selection process. (A future blog will drill down into the diagram.)

While OrionX is not a typical industry analyst firm, we will offer samples of our reports from time to time. We hope they are useful to you and provide a glimpse of our work. They will complement our blog.

As always, thank you for your support and friendship. Please track our progress on social media or contact us for a free consultation or with any comments. We’d love to hear from you.

Competitive analysis (competitive intelligence) is critically important for strategy, planning, and sales support. But it should also permeate an organization’s DNA, preserve high ethical standards, and deliver high quality content. Competitive awareness is like security awareness or commitment to quality – it’s the duty of everyone in the organization. This is what we call a “Competitive Culture”.

A competitive culture means everyone in the organization is tuned in to the competitive realities the company and its products and services face. It is not a one-time activity, but an essential ongoing requirement. It must evolve continuously as competitors strengthen and fade, new competitors enter the market, and as you introduce new offerings or pursue new strategies.

The competitive function takes input from and supports various stakeholders, including sales, marketing, executive management, and engineering. As it matures within the organization, it evolves into both a back-office function, with its own processes and roadmap, as well as a collective effort for the organization at large.

Everyone in the company can contribute to intelligence about the competition and can use it to enhance their work performance. And everyone must protect information that, if known by competitors, would decrease the company’s competitiveness.

OrionX has a history of excellence in developing best practices and tools across the competitive intelligence discipline and we offer a range of packages. The starter package provides:

* Target market / solutions / product roadmap review

* Program objectives, elements, timelines, calendar, dashboard

* Competitive SWOT, playbook, head-to-head comparisons, beat sheets, and more

“A very critical requirement for every company and every organization is competitive analysis. It is something that needs to be done with high integrity but also with high quality. And it requires the development of processes and the ability to look at your competition and understand why customers might be buying [from] them, and how they evaluate you based on the competition. We have developed best practices and have effectively worked with clients to arrive at a competitive intelligence function that you can be proud of.”

– Shahin Khan, Partner and Founder, OrionX

Please contact us if you would like to enhance your company’s competitive stance. Additional options beyond the starter package are available that would include ongoing execution, internal sales campaign, public campaigns, and corporate and business programs.

Stephen Perrenod has lived and worked in Asia, the US, and Europe and possesses business experience across all major geographies in the Asia-Pacific region. He specializes in corporate strategy for market expansion, and cryptocurrency/blockchain on a deep foundation of high performance computing (HPC), cloud computing and big data. He is a prolific blogger and author of a book on cosmology.

“Just how big a market is this?” If you are an entrepreneur raising money or a seasoned product manager, or a CEO planning on an IPO, someone is going to ask you that question and you need to be well prepared with the answer. Market sizing is an important and common requirement. It is an especially challenging task when you are creating a new market or addressing emerging opportunities. This is why we created a packaged solution focused on market sizing.

Market size can be defined by the number of buyers of your product, or users of your service, and the price they are willing to pay for it. Given the number of users and the average solution price, you can calculate the market size and, from there, estimate growth and expected market share.
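As a rough illustration of that arithmetic, the sketch below multiplies a buyer count by an average price and then applies an expected share. The function names (`market_size`, `expected_revenue`) and every figure are hypothetical placeholders chosen for the example, not OrionX data or a prescribed method.

```python
def market_size(buyers: int, avg_price: float) -> float:
    """Market size as number of buyers times the average price they pay."""
    if buyers < 0 or avg_price < 0:
        raise ValueError("buyers and avg_price must be non-negative")
    return buyers * avg_price


def expected_revenue(size: float, share: float) -> float:
    """Revenue implied by capturing a given fraction (0..1) of the market."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return size * share


# Hypothetical inputs: 20,000 buyers paying $1,500 on average, 5% share.
size = market_size(buyers=20_000, avg_price=1_500.0)
revenue = expected_revenue(size, share=0.05)

print(f"market size: ${size:,.0f}")
print(f"expected revenue at 5% share: ${revenue:,.0f}")
```

Real sizing work would replace the single average price with segment-level prices and adoption rates, but the structure of the calculation stays the same.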

The main purpose of market sizing is to inform business or product viability, specifically go/no-go decisions, as well as key marketing decisions, such as pricing of the service, resource planning, or marketing tactics to increase usage, together with an estimate of the level of operational and technological capabilities required to service the expected market.

There are two main market-sizing methods: bottom-up and top-down, each with unique benefits and challenges.

Organizations can draw on several sources for market sizing data. For example:

When doing market analysis, market sizing is frequently referred to as TAM, SAM, and SOM, acronyms that represent different subsets of a market. But what do they mean and why are they useful to investors or product development executives?

Depending on your company’s product or service, calculating TAM, SAM, and SOM will be very different. A hardware-based company may be constrained by geography because of service and support requirements, whereas today’s software company does not have the same constraints: distribution is via the Internet and is global by default. Similarly, a startup is very different from an established company, XYZ Widgets versus IBM for example.

Still confused about TAM SAM SOM? Here is an example.

Let’s say your company is a storage startup and planning to introduce a new storage widget. Your TAM would be the worldwide storage market. If your company had business operations in every country and there was no competition, then your revenue would be equivalent to the TAM, 100% market share — highly unlikely.

As a storage startup, it would be more realistic to focus on prospects within your city or state. Geographic proximity is an important consideration at this early stage because you have limited distribution and support capability. This would be your SAM: the demand for your storage products within your reach. If you were the only storage provider in your state, then you would generate 100% of the revenue. Again, highly unlikely.

Realistically, you can hope to capture only part of the SAM. With a new storage product you would attract early adopters of your new widget within your selected geography. This is your SOM.

TAM SAM SOM have different purposes: SOM indicates the short-term sales potential, SOM / SAM the target market share, and TAM the potential at scale. These all play an important role in assessing the investment opportunity and the focus should really be on getting the most accurate numbers rather than the biggest possible numbers.
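The relationship between the three numbers can be sketched in a few lines. The example below is a minimal illustration for the storage-startup scenario, assuming invented dollar figures and a hypothetical `MarketFunnel` class. The acronym expansions in the comments are the common industry ones, and the only rule enforced is that each figure is a subset of the one above it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarketFunnel:
    """Hypothetical container for the three market-size figures (same currency)."""
    tam: float  # Total Addressable Market: the worldwide storage market
    sam: float  # Serviceable Available Market: demand within your reach
    som: float  # Serviceable Obtainable Market: what you can realistically capture

    def __post_init__(self) -> None:
        # Each figure is a subset of the one above it.
        if not (0 <= self.som <= self.sam <= self.tam) or self.sam == 0:
            raise ValueError("expected 0 <= SOM <= SAM <= TAM with SAM > 0")

    @property
    def target_share(self) -> float:
        """SOM / SAM: the target market share within the reachable market."""
        return self.som / self.sam


# Illustrative numbers only: $40B worldwide, $200M in-state, $10M early adopters.
funnel = MarketFunnel(tam=40e9, sam=200e6, som=10e6)

print(f"short-term sales potential (SOM): ${funnel.som:,.0f}")
print(f"target market share (SOM / SAM): {funnel.target_share:.1%}")
print(f"potential at scale (TAM): ${funnel.tam:,.0f}")
```

The validation step reflects the point about accuracy over size: a SOM larger than the SAM, or a SAM larger than the TAM, signals an inconsistent estimate rather than a bigger opportunity.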

In-depth industry knowledge, logical analysis, and a command of business processes are some of the necessary ingredients used by OrionX to arrive at reliable market size projections based on available data. The analysis can then be customized for executive updates, due-diligence, the pricing process, market share objectives, or manufacturing capacity planning.

We are excited to announce 8 new packaged solutions designed to address several common requirements. Here’s a link to the press release we issued today.

One way to see OrionX is as a partner that can help you with content, context, and dissemination. OrionX Strategy can create the right content because it conducts deep analysis and provides a solid perspective in important technology developments like IoT, Cloud, Hyperscale and HPC, Big Data, Cognitive Computing and Machine Learning, Security, Cryptocurrency, In-Memory Computing, etc. OrionX Marketing can put that content in the right context by aligning it with your business objectives and customer requirements. And OrionX PR can build on that with new content and packaging to pursue the right dissemination, whether by doing the PR work itself or by working with your existing PR resources.

In all cases, the interactions among these three areas make OrionX especially effective. Strategy is aware of market positioning and communication requirements; Marketing benefits from deep industry knowledge and technical depth; and PR can create engaging content that is not just interesting but also insightful, etc.

Having helped over 50 technology leaders connect products to customers, we have seen several common requirements. It is different in every case, but business objectives are similar and the process can be substantially the same. Over the years, our solutions to these requirements have developed into what we can now unveil as packaged solutions: well-defined, targeted, and easy to adopt.

The packages being introduced today are:

We are also offering a free consultation with one of our partners. On almost every occasion, we’ve been told that such consultations have saved our clients time and money and given them new insights. It’s been a good way for us to show our commitment to your success, and whether or not it leads to business, it has always led to new relationships and good word of mouth.

Please take a moment to look at the new packages and let us know what you think.

Here at OrionX.net, we are fortunate to work with tech leaders across several industries and geographies, serving markets in Mobile, Social, Cloud, and Big Data (including Analytics, Cognitive Computing, IoT, Machine Learning, Semantic Web, etc.), and focused on pretty much every part of the “stack”, from chips to apps and everything in between. Doing this for several years has given us a privileged perspective. We spent some time discussing what we are seeing and capturing some of the trends in this blog: our 2016 technology issues and predictions. We cut it at 17 but we hope it’s a quick read that you find worthwhile. Let us know if you’d like to discuss any of the items or the companies that are driving them.

Energy is arguably the most important industry on the planet. Advances in energy efficiency and sustainable energy sources, combined with the debate and observations of climate change, and new ways of managing capacity risk are coming together to have a profound impact on the social and political structure of the world, as indicated by the Paris Agreement and the recent collapse in energy prices. These trends will deepen into 2016.

Money was the original virtualization technology! It decoupled value from goods, simplified commerce, and enabled the service economy. Free from the limitations of physical money, cryptocurrencies can take a fresh approach to simplifying how value (and ultimately trust, in a financial sense) is represented, modified, transferred, and guaranteed in a self-regulated manner. While none of the existing implementations accomplish that, they are getting better understood and the ecosystem built around them will point the way toward a true digital currency.

Whether flying, driving, walking, sailing, or swimming, drones and robots of all kinds are increasingly common. Full autonomy will remain a fantasy except for very well defined and constrained use cases. Commercial success favors technologies that aim to augment a human operator. The technology complexity is not in getting one of them to do an acceptable job, but in managing fleets of them as a single network. But everything will be subordinate to an evolving and complex legal framework.

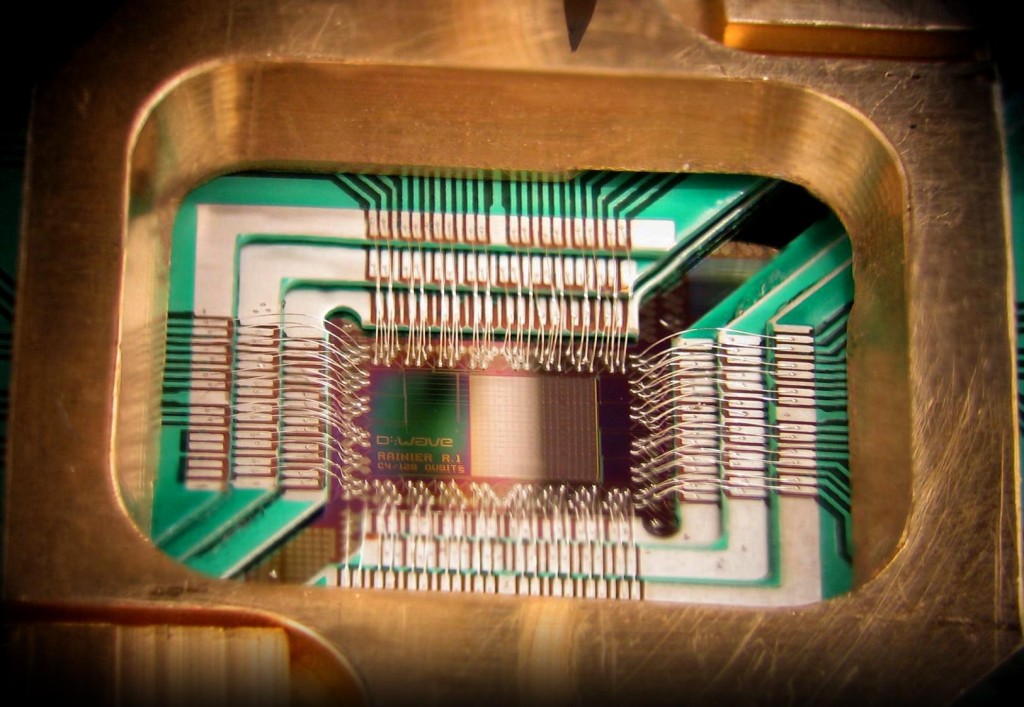

A whole new approach to computing (as in, not binary any more), quantum computing is as promising as it is unproven. Quantum computing goes beyond Moore’s law since every additional quantum bit (qubit) doubles the computational power, similar to the famous wheat and chessboard problem. So the payoff is huge, even though it is, for now, expensive, unproven, and difficult to use. But new players will become more visible, early use cases and gaps will become better defined, new use cases will be identified, and a short stack will emerge to ease programming. This is reminiscent of the early days of computing, so a visit to the Computer History Museum would be a good recalibrating experience.
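The doubling can be made concrete with a few lines of arithmetic. The sketch below only counts things: the number of basis states an n-qubit register spans (2^n) and the grains of wheat on a chessboard where each square holds twice the previous one. The function names are illustrative, and the count says nothing about how much practical speedup a given algorithm achieves.

```python
def state_space(qubits: int) -> int:
    """Number of basis states spanned by a register of `qubits` qubits: 2**n."""
    if qubits < 0:
        raise ValueError("qubits must be non-negative")
    return 2 ** qubits


def grains_on_square(square: int) -> int:
    """Wheat and chessboard: square 1 holds 1 grain, each next square doubles."""
    if not 1 <= square <= 64:
        raise ValueError("square must be between 1 and 64")
    return 2 ** (square - 1)


# Adding one qubit doubles the state space.
for n in (1, 2, 10, 50):
    print(f"{n:>2} qubits -> {state_space(n):,} basis states")

# Total grains on the full board: 2**64 - 1.
total_grains = sum(grains_on_square(s) for s in range(1, 65))
print(f"grains on the whole chessboard: {total_grains:,}")
```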

The changing nature of work and traditional jobs received substantial coverage in 2015. The prospect of artificial intelligence that could actually work is causing fears of wholesale elimination of jobs and management layers. On the other hand, employers routinely have difficulty finding talent, and employees routinely have difficulty staying engaged. There is a structural problem here. The “sharing economy” is one approach, albeit legally challenged in the short term. But the freelance and outsourcing approach is alive and well and thriving. In this model, everything is either an activity-sliced project, or a time-sliced process, to be performed by the most suitable internal or external resources. Already, in Silicon Valley, it is common to see people carrying 2-3 business cards as they match their skills and passions to their work and livelihood in a more flexible way than the elusive “permanent” full-time job.

With the tectonic shifts in technology, demographics, and globalization, companies must transform or else. Design thinking is a good way to bring customer-centricity further into a company and ignite employees’ creativity, going beyond traditional “data driven needs analysis.” What is different this time is the intimate integration of arts and sciences. What remains the same is the sheer difficulty of translating complex user needs to products that are simple but not simplistic, and beautiful yet functional.

Old guard IT vendors will have the upper hand over new Cloud leaders as they all rush to claim IoT leadership. IoT is where Big Data Analytics, Cognitive Computing, and Machine Learning come together for entirely new ways of managing business processes. In its current (emerging) condition, IoT requires a lot more vertical specialization, professional services, and “solution-selling” than cloud computing did when it was in its relative infancy. This gives traditional big (and even small) IT vendors a chance to drive and define the terms of competition, possibly controlling the choice of cloud/software-stack.

Cybercrime is big business and any organization with digital assets is vulnerable to attack. As Cloud and Mobile weaken IT’s control and IoT adds many more points of vulnerability, new strategies are needed. Cloud-native security technologies will include those that redirect traffic through SaaS-based filters, Micro-Zones to permeate security throughout an app, and brand new approaches to data security.

In any value chain, a vendor must decide what value it offers and to whom. With cloud computing, the IT value chain has been disrupted. What used to be a system is now a piece of some cloud somewhere. As the real growth moves to “as-a-service” procurements, there will be fewer but bigger buyers of raw technology who drive hardware design towards scale and commoditization.

The computing industry was down the path of hardware partitioning when virtualization took over, and dynamic reconfiguration of hardware resources took a backseat to manipulating software containers. Infrastructure-as-code, composable infrastructure, converged infrastructure, and rack-optimized designs expand that concept. But container reconfiguration is insufficient at scale, and what is needed is hardware reconfiguration across the data center. That is the next frontier and the technologies to enable it are coming.

Mobile devices are now sufficiently capable that new features may or may not be needed by all users and new OS revs often slow down the device. Even with free upgrades and pre-downloaded OS revs, it is hard to make customers upgrade, while power users jailbreak and get the new features on an old OS. Over time, new capabilities will be provided via more modular dynamically loaded OS services, essentially a new class of apps that are deeply integrated into the OS, to be downloaded on demand.

Nowhere are the demands for scale, flexibility and effectiveness for analytics greater than in social media. This is far beyond Web Analytics. The seven largest “populations” in the world are Google, China, India, Facebook, WhatsApp, WeChat and Baidu, in approximately that order, not to mention Amazon, Apple, Samsung, and several others, plus many important commercial and government applications that rely on social media datasets. Searching through such large datasets with very large numbers of images, social commentary, and complex network relationships stresses the analytical algorithms far beyond anything ever seen before. The pace of algorithmic development for data analytics and for machine intelligence will accelerate, increasingly shaped by social media requirements.

Legacy modernization will get more attention as micro-services, data-flow, and scale-out elasticity become established. But long-term, software engineering is in dire need of the predictability and maintainability that is associated with other engineering disciplines. That need is not going away and may very well require a wholesale new methodology for programming. In the meantime, technologies that help automate software modernization, or enable modular maintainability, will gain traction.

The technical and operational burden on developers has been growing. It is not sustainable. NoSQL databases removed the time-delay and complexity of a data schema at the expense of more complex codes, pulling developers closer to data management and persistence issues. DevOps, on the other hand, has pulled developers closer to the actual deployment and operation of apps with the associated networking, resource allocation, and quality-of-service (QoS) issues. This is another “rubber band” that cannot stretch much more. As cloud adoption continues, development, deployment, and operations will become more synchronized enabling more automation.

The idea of a “memory-only architecture” dates back several decades. New super-large memory systems are finally making it possible to hold entire data sets in memory. Combine this with Flash (and other emerging storage-class memory technologies) and you have the recipe for entirely new ways of achieving near-real-time/straight-through processing.

Small and mid-size public cloud providers will form coalitions around a large market leader to offer enterprise customers the flexibility of cloud without the lock-in and the risk of having a single supplier for a given app. This opens the door for transparently running a single app across multiple public clouds at the same time.

It’s been years since most app developers needed to know what CPU their app runs on, since they work on the higher levels of a tall software stack. Porting code still requires time and effort but for elastic/stateless cloud apps, the work is to make sure the software stack is there and works as expected. But the emergence of large cloud providers is changing the dynamics. They have the wherewithal to port any system software to any CPU thus assuring a rich software infrastructure. And they need to differentiate and cut costs. We are already seeing GPUs in cloud offerings and FPGAs embedded in CPUs. We will also see the first examples of special cloud regions based on one or more of ARM, OpenPower, MIPS, and SPARC. Large providers can now offer a usable cloud infrastructure using any hardware that is differentiated and economically viable, free from the requirement of binary compatibility.

OrionX is a team of industry analysts, marketing executives, and demand generation experts. With a stellar reputation in Silicon Valley, OrionX is known for its trusted counsel, command of market forces, technical depth, and original content.

There has been much discussion in recent years about the continued relevance of the High Performance Linpack (HPL) benchmark as a valid measure of the performance of the world’s most capable machines. Some supercomputing sites have opted out of the TOP500 completely, such as the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign, which houses the Cray Blue Waters machine. Blue Waters is claimed to be the fastest supercomputer on a university campus, although without an agreed benchmark such a claim is hard to verify.

At a superficial level it appears as though any progress in the upper echelons of the TOP500 may have stalled with little significant change in the TOP10 in recent years and just two new machines added to that elite group in the November 2015 list: Trinity at 8.10 Pflop/s and Hazel Hen at 5.64 Pflop/s, both Cray systems increasing the company’s share of the TOP10 machines to 50%. The TOP500 results are available at http://www.top500.org.

A new benchmark – the High Performance Conjugate Gradients (HPCG) – introduced two years ago was designed to be better aligned with modern supercomputing workloads and includes system features such as high performance interconnects, memory systems, and fine grain cooperative threading. The combined pair of results provided by HPCG and HPL can act as bookends on the performance spectrum of any given system. As of July 2015 HPCG had been used to rank about 40 of the top systems on the TOP500 list.

The November TOP500 List: Important Data for Big Iron

Although it is being complemented by other benchmarks, the HPL-based TOP500 is still the most significant resource for tracking the pace of supercomputing technology development, historically and for the foreseeable future.

The first petascale machine, ‘Roadrunner’, debuted in June 2008, twelve years after the first terascale machine – ASCI Red in 1996. Until just a few years ago 2018 was the target for hitting the exascale capability level. As 2015 comes to its close the first exascale machine seems much more likely to debut in the first half of the next decade and probably later in that window rather than earlier. So where are we with the TOP500 this November, and what can we expect in the next few lists?

Highlights from the November 2015 TOP500 List on performance:

– The total combined performance of all 500 systems has grown to 420 Pflop/s, compared to 361 Pflop/s last July and 309 Pflop/s a year previously, continuing a noticeable slowdown in growth compared to the previous long-term trend.

– Just over 20% of the systems (a total of 104) use accelerator/co-processor technology, up from 90 in July 2015.

– The latest list shows 80 petascale systems worldwide, making up 16% of the TOP500, up from 50 a year earlier, and significantly more than double the number two years earlier. If this trend continues, all of the TOP100 systems are likely to be petascale machines by the end of 2016.

So what do we conclude from this? Certainly that the road to exascale is significantly harder than we may have thought, not just from a technology perspective, but even more importantly from a geo-political and commercial perspective. The aggregate performance level of all of the TOP500 machines has just edged slightly over 40% of the HPL metric for an exascale machine.
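The percentages quoted above follow directly from the list figures. The short check below reproduces them, using only the numbers stated in the highlights plus the definition of an exaflop as 1,000 Pflop/s. The helper name `growth` and the dictionary labels are illustrative choices made for this sketch.

```python
# Figures quoted in the highlights above (Pflop/s and system counts).
TOTAL_LATEST = 420.0      # latest list
TOTAL_LAST_JULY = 361.0   # previous list
TOTAL_YEAR_AGO = 309.0    # "a year previously"
ACCELERATED_SYSTEMS = 104
PETASCALE_SYSTEMS = 80
LIST_SIZE = 500
EXAFLOP_IN_PFLOPS = 1000.0  # 1 Eflop/s = 1,000 Pflop/s


def growth(new: float, old: float) -> float:
    """Fractional growth from `old` to `new`."""
    return (new - old) / old


summary = {
    "growth since last July": growth(TOTAL_LATEST, TOTAL_LAST_JULY),
    "growth over the year": growth(TOTAL_LATEST, TOTAL_YEAR_AGO),
    "share of list using accelerators": ACCELERATED_SYSTEMS / LIST_SIZE,
    "share of list at petascale": PETASCALE_SYSTEMS / LIST_SIZE,
    "aggregate as fraction of one exaflop": TOTAL_LATEST / EXAFLOP_IN_PFLOPS,
}

for label, value in summary.items():
    print(f"{label}: {value:.1%}")
```

Seen this way, all 500 systems combined deliver 42% of a single exascale machine's HPL target, which is the "slightly over 40%" cited above.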

Most importantly, increasing the number of petascale-capable resources available to scientists, researchers, and other users up to 20% of the entire list will be a significant milestone. From a useful outcome and transformational perspective it is much more important to support advances in science, research and analysis than to ring the bell with the world’s first exascale system on the TOP500 in 2018, 2023 or 2025.

Architecture

As the introduction of HPCG acknowledges, HPL, the TOP500 performance benchmark, is only one part of the HPC performance equation.

The combination of modern multi-core 64-bit CPUs and math accelerators from Nvidia, Intel and others has addressed many of the issues related to computational performance. The focus on bottlenecks has shifted away from computational strength to data-centric and energy issues, which influence HPL results but are not explicitly measured in them. From an architectural perspective, however, the TOP500 lists still provide extremely useful insight into the trends.

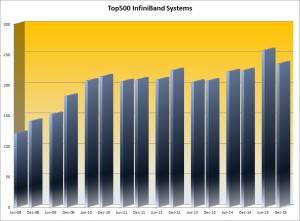

Observations from the June 2015 TOP500 List on system interconnects:

– After two years of strong growth from 2008 to 2010, InfiniBand-based TOP500 systems plateaued at 40% of the TOP500 while compute performance grew aggressively with the focus on hybrid, accelerated systems.

– The uptick in InfiniBand deployments in June 2015 to over 50% of the TOP500 list for the first time does not appear to be the start of a trend, with Gigabit Ethernet systems increasing to over a third (36.4%) of the TOP500, up from 29.4%.

The TOP500: The November List and What’s Coming Soon

Although the TOP10 on the list have shown little change for a few years, especially with the planned upgrade to Tianhe-2 blocked by the US government, there are systems under development in China that are expected to challenge the 100 Pflop/s barrier in the next twelve months. In the USA, the CORAL initiative is expected to significantly exceed the 100 Pflop/s mark, targeting 100 to 300 Pflop/s with two machines from IBM and one from Cray, but not before the 2017 time frame. None of these systems is expected to deliver more than one third of exascale capability.

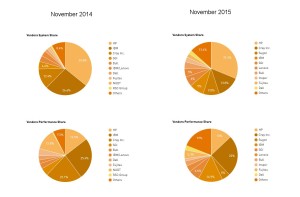

Vendors: A Changing of the Guard

The USA is still the largest consumer of TOP500 systems with 40% of the share by system count, which clearly favors US vendors, but there are definite signs of change on the wind.

Cray is the clear leader in performance with a 24.9 percent share of installed total performance, roughly the same as a year earlier. Each of the other leading US vendors is experiencing declining share with IBM in second place with a 14.9 percent share, down from 26.1 percent last November. Hewlett Packard is third with 12.9 percent, down from 14.5 percent twelve months ago.

These trends are echoed in the system count numbers. HP has the lead in systems and now has 156 (31%), down from 179 systems (36%) twelve months ago. Cray is in second place with 69 systems (13.8%), up from 12.4% last November. IBM now has 45 systems (9%), down from 136 systems (27.2%) a year ago.

On the processor architecture front, a total of 445 systems (89%) are now using Intel processors, slightly up from 86.2 percent six months ago, with IBM Power processors now at 26 systems (5.2%), down from 38 systems (7.6%) six months ago.

Geo-Political Considerations

Perhaps the single most interesting insight to come out of the TOP500 has little to do with the benchmark itself. It comes from the geographical distribution data, which in itself underscores the importance of the TOP500 lists in tracking trends.

The number of systems in the United States has fallen to the lowest point since the TOP500 list was created in 1993, down to 201 from 231 in July. The European share has fallen to 107 systems compared to 141 on the last list and is now lower than the Asian share, which has risen to 173 systems, up from 107 on the previous list, with China nearly tripling its number of systems to 109. In Europe, Germany is the clear leader with 32 systems, followed by France and the UK at 18 systems each. Although the numbers for performance-based distributions vary slightly, the percentage distribution is remarkably similar.

The USA and China divide over 60% of the TOP500 systems between them, with China having half the number and capability of the US-based machines. While there is some discussion about how efficiently the Chinese systems are utilized in comparison to the larger US-based systems, that situation is likely to be temporary and could be addressed by a change in funding models. It is abundantly clear that China is much more serious about increasing funding for HPC systems while US funding levels continue to demonstrate a lack of commitment to maintaining development beyond specific areas such as high energy physics.

TOP500: “I Have Seen The Future and it Isn’t Exascale”

HPC architectures, workloads and markets will all be in flux over the next few years, and an established metric such as the TOP500 is an invaluable tool for monitoring the trends. Even though HPL is no longer the only relevant benchmark, the emergence of complementary benchmarks such as HPCG serves to enhance the value of the TOP500, not to diminish it. It will take a while to generate a body of data equivalent to the extensive resource that has been established by the TOP500.

What we can see from the current trends shown in the November 2015 TOP500 list is that although an exascale machine will be built eventually, it almost certainly will not be delivered this decade, and possibly not until halfway through the next decade. Looking at the current trends, it is also very unlikely that the first exascale machine will be built in the USA, and much more likely that it will be built in China.

Over the next few years the TOP500 is likely to remain the best tool we have to monitor geo-political and technology trends in the HPC community. If the adage coined by the Council on Competitiveness, “To out-compute is to out-compete”, has any relevance, the TOP500 will likely provide us with a good idea of when the balance of total compute capability will shift from the USA to China.

Peter ffoulkes – Partner at OrionX.net

Last week, in Part 1 of this two-part blog, we looked at trends in Big Data and analytics, and started to touch on the relationship with HPC (High Performance Computing). In this week’s blog we take a look at the usage of Big Data in HPC and what commercial and HPC Big Data environments have in common, as well as their differences.

High Performance Computing has been the breeding ground for many important mainstream computing and IT developments, including:

Big Data has indeed been a reality in many HPC disciplines for decades, including:

All of these fields and others generate massive amounts of data, which must be cleaned, calibrated, reduced and analyzed in great depth in order to extract knowledge. This might be a new genetic sequence, the identification of a new particle such as the Higgs Boson, the location and characteristics of an oil reservoir, or a more accurate weather forecast. And naturally the data volumes and velocity are growing continually as scientific and engineering instrumentation becomes more advanced.

A recent article, published in the July 2015 issue of the Communications of the ACM, is titled “Exascale computing and Big Data”. Authors Daniel A. Reed and Jack Dongarra note that “scientific discovery and engineering innovation requires unifying traditionally separated high-performance computing and big data analytics”.

(n.b. Exascale is 1000 x Petascale, which in turn is 1000 x Terascale. HPC and Big Data are already well into the Petascale era. Europe, Japan, China and the U.S. have all announced Exascale HPC initiatives spanning the next several years.)

What’s in common between Big Data environments and HPC environments? Both are characterized by racks and racks of commodity x86 systems configured as compute clusters. Both environments have compute system management challenges in terms of power, cooling, reliability and administration, scaling to as many as hundreds of thousands of cores and many Petabytes of data. Both are characterized by large amounts of local node storage, increasing use of flash memory for fast data access and high-bandwidth switches between compute nodes. And both are characterized by use of Linux operating systems or flavors of Unix. Open source software is generally favored up through the middleware level.

What’s different? Big Data and analytics use VMs above the OS, SANs as well as local storage, the Hadoop (parallel) file system, key-value store methods, and a different middleware environment including MapReduce, Hive and the like. Higher-level languages (R, Python, Pig Latin) are preferred for development purposes.

HPC uses traditional compiled-language development environments (C, C++, and Fortran), numerical and parallel libraries, batch schedulers and the Lustre parallel file system. In some cases HPC systems also employ accelerator chips, such as Nvidia GPUs or Intel Xeon Phi processors, to enhance floating point performance. (Prediction: we’ll start seeing more and more of these used in Big Data analytics as well – http://www.nvidia.com/object/data-science-analytics-database.html).

But in both cases the pipeline is essentially:

Data acquisition -> Data processing -> Model / Simulation -> Analytics -> Results

The analytics must be based on and informed by a model that is attempting to capture the essence of the phenomena being measured and analyzed. There is always a model — it may be simple or complex; it may be implicit or explicit.
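As a rough illustration of this pipeline, here is a minimal Python sketch. Everything in it is hypothetical: the stage function names, the sample readings, and the choice of model (a straight line through the origin fitted by least squares) are assumptions made for the example, not a description of any particular Big Data or HPC system.

```python
from typing import List, Optional, Tuple


def acquire() -> List[Optional[float]]:
    """Data acquisition: a stand-in for readings taken at steps 1, 2, 3, ..."""
    return [2.0, 4.0, None, 8.0, 10.0]  # None marks a dropped reading


def process(raw: List[Optional[float]]) -> List[Tuple[int, float]]:
    """Data processing: pair each reading with its step and drop bad ones."""
    return [(step, y) for step, y in enumerate(raw, start=1) if y is not None]


def fit_model(points: List[Tuple[int, float]]) -> float:
    """Model: assume y = slope * x and fit the slope by least squares."""
    numerator = sum(x * y for x, y in points)
    denominator = sum(x * x for x, _ in points)
    return numerator / denominator


def analyze(slope: float, step: int) -> float:
    """Analytics: use the fitted model to predict a value for a given step."""
    return slope * step


def run_pipeline() -> float:
    """Chain the stages: acquisition -> processing -> model -> analytics -> result."""
    points = process(acquire())
    slope = fit_model(points)
    return analyze(slope, step=3)  # estimate the reading that was dropped


print(run_pipeline())
```

The model here is explicit and very simple, but the analytics step cannot produce its estimate without it, which is the point made above: there is always a model behind the analysis.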

Human behavior is highly complex, and every user, every customer, every patient, is unique. As applications become more complex in search of higher accuracy and greater insight, and as compute clusters and data management capabilities become more powerful, the models or assumptions behind the analytics will in turn become more complex and more capable. This will result in more predictive and prescriptive power.

Our general conclusion is that while there are some distinct differences between Big Data and HPC, there are significant commonalities. Big Data is more the province of social sciences and HPC more the province of physical sciences and engineering, but they overlap, and especially so when it comes to the life sciences. Is bioinformatics HPC or Big Data? Yes, both. How about the analysis of clinical trials for new pharmaceuticals? Arguably, both again.

So cross-fertilization and areas of convergence will continue, while Big Data and HPC each continue to develop new methods appropriate to their specific disciplines. And many of these new methods will cross over to the other area when appropriate.

The National Science Foundation believes in the convergence of Big Data and HPC and is putting $2.4 million of its money into this at the University of Michigan, in support of various applications including climate science, cardiovascular disease and dark matter and dark energy. See:

What are your thoughts on the convergence (or not) of Big Data and HPC?

Stephen Perrenod has lived and worked in Asia, the US, and Europe and possesses business experience across all major geographies in the Asia-Pacific region. He specializes in corporate strategy for market expansion, and cryptocurrency/blockchain on a deep foundation of high performance computing (HPC), cloud computing and big data. He is a prolific blogger and author of a book on cosmology.

Data volumes, velocity, and variety are increasing as consumer devices become more powerful. PCs, smart phones and tablets are the instrumentation, along with the business applications that continually capture user input, usage patterns and transactions. As devices become more powerful each year (every few months!), the generated volumes of data and the speed of data flow both increase concomitantly. And the variety of available applications and usage models for consumer devices is rapidly increasing as well.

Holding more and more data in-memory, via in-memory databases and in-memory computing, is becoming increasingly important in Big Data and data management more broadly. HPC has always required very large memories due to both large data volumes and the complexity of the simulation models.

As is often pointed out in the Big Data field, it is the analytics that matters. Collecting, classifying and sorting data is a necessary prerequisite. But until a proper analysis is done, one has only expended time, energy and money. Analytics is where the value extraction happens, and that must justify the collection effort.

Applications for Big Data include customer retention, fraud detection, cross-selling, direct marketing, portfolio management, risk management, underwriting, decision support, and algorithmic trading. Industries deploying Big Data applications include telecommunications, retail, finance, insurance, health care, and the pharmaceutical industry.

There is a wide variety of statistical methods and techniques employed in the analytical phase. These can include higher-level AI or machine learning techniques, e.g., neural networks, support vector machines, radial basis functions, and nearest neighbor methods. These imply a significant requirement for a large number of floating point operations, which is characteristic of most of HPC.

For one view on this, here is a recent report on InsideHPC.com and video on “Why HPC is so important to AI”.
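To make the floating point requirement concrete, here is a minimal Python sketch of a brute-force nearest neighbor lookup, one of the methods listed above. The customer records, segment labels and function names are made up for illustration, and the operation count is a rough estimate that assumes about three floating point operations per feature per stored record.

```python
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance: one subtract, one multiply, one add per dimension."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_neighbor(
    query: Sequence[float],
    points: List[Sequence[float]],
    labels: List[str],
) -> Tuple[str, int]:
    """Return the label of the closest stored point and a rough flop estimate."""
    best_index = min(range(len(points)), key=lambda i: squared_distance(query, points[i]))
    # Rough estimate, assuming about 3 floating point operations per dimension per point.
    flops = 3 * len(query) * len(points)
    return labels[best_index], flops


# Made-up customer records: (monthly spend, visits per month).
customers = [(20.0, 1.0), (25.0, 2.0), (200.0, 12.0), (220.0, 15.0)]
segments = ["occasional", "occasional", "frequent", "frequent"]

label, flops = nearest_neighbor((210.0, 13.0), customers, segments)
print(label, flops)
```

With four records and two features the estimate is only 24 operations per query. Because the count grows with the product of records and features, the same brute-force lookup over, say, 100 million records with 100 features each would need on the order of 30 billion operations per query, which is where HPC-class floating point capability starts to matter.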

If one has the right back-end applications and systems then it is possible to keep up with the growth in data and perform the deep analytics necessary to extract new insights about customers, their wants and desires, and their behavior and buying patterns. These back-end systems increasingly need to be of the scale of HPC systems in order to stay on top of all of the ever more rapidly incoming data, and to meet the requirement to extract maximum value.

In Part 2 of this blog series, we’ll look at how Big Data and HPC environments differ, and at what they have in common.

Stephen Perrenod has lived and worked in Asia, the US, and Europe and possesses business experience across all major geographies in the Asia-Pacific region. He specializes in corporate strategy for market expansion, and cryptocurrency/blockchain on a deep foundation of high performance computing (HPC), cloud computing and big data. He is a prolific blogger and author of a book on cosmology.

The TV series Mad Men was a smashing success, and it offered many teachable moments in marketing.