Deep Learning & Pieces of Eight

What does a Spanish silver dollar have to do with Deep Learning? It’s a question of standards and required precision. The widely used Spanish coin was introduced at the end of the 16th century as Spain exploited the vast riches of New World silver. It was denominated as 8 Reales. Because of its standard characteristics it served as a global currency.

Ferdinand VI Silver peso (8 Reales, or Spanish silver dollar)

The American colonies in the 18th century suffered from a shortage of British coinage and used the Spanish dollar widely; it entered circulation through trade with the West Indies. The Spanish dollar was also known as “pieces of eight” and in fact was often cut into pieces known as “bits” with 8 bits comprising a dollar. This is where the expression “two bits” referring to a quarter dollar comes from. The original US dollar coin was essentially based on the Spanish dollar.

For Deep Learning, the question arises: what is the requisite precision for robust performance of a multilayer neural network? Most neural net applications are implemented with 32-bit floating point precision, but is this really necessary?

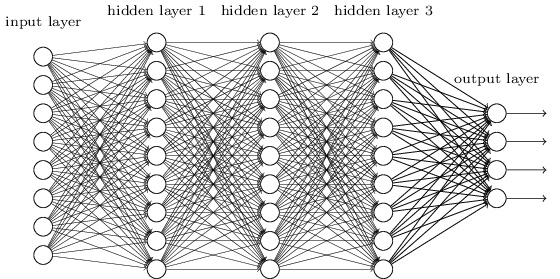

Five-layer network. Source: http://neuralnetworksanddeeplearning.com/chap6.html, CC BY-NC 3.0

It seems that many neural net applications could be successfully deployed with integer or fixed point arithmetic rather than floating point, and with only 8 to 16 bits of precision. Training may require higher precision, but not necessarily.

A team of researchers from IBM’s Watson Labs and Almaden Research Center find that:

“deep networks can be trained using only 16-bit wide fixed-point number representation when using stochastic rounding, and incur little to no degradation in the classification accuracy”.
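To make the idea of stochastic rounding concrete, here is a minimal NumPy sketch. It is not the researchers' implementation: the function name `stochastic_round_fixed`, the default split of 8 fractional bits within a 16-bit word, and the saturation behavior are assumptions chosen for illustration. A value is rounded up to the next representable fixed-point step with probability equal to its fractional remainder, so the rounding is unbiased on average.

```python
import numpy as np

def stochastic_round_fixed(x, frac_bits=8, word_bits=16, rng=None):
    """Round x onto a signed fixed-point grid using stochastic rounding.

    frac_bits and word_bits are illustrative defaults (assumptions),
    not values taken from the cited paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    scale = 2.0 ** frac_bits                      # grid spacing is 1 / scale
    scaled = np.asarray(x, dtype=np.float64) * scale
    lower = np.floor(scaled)
    # Round up with probability equal to the distance above the lower grid point.
    round_up = rng.random(scaled.shape) < (scaled - lower)
    q = lower + round_up
    # Saturate to the range of a signed word_bits-wide integer.
    q = np.clip(q, -(2 ** (word_bits - 1)), 2 ** (word_bits - 1) - 1)
    return q / scale

# Usage: individual results land on the grid, but their average tracks the input.
samples = stochastic_round_fixed(np.full(10_000, 0.3), frac_bits=2,
                                 rng=np.random.default_rng(0))
print(np.unique(samples), samples.mean())
```

With round-to-nearest, 0.3 on a grid of spacing 0.25 would always become 0.25; with stochastic rounding it becomes 0.25 most of the time and 0.5 occasionally, which preserves small updates in expectation.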

In his blog entry “Why are Eight Bits Enough for Deep Neural Networks”, Pete Warden states that:

“As long as you accumulate to 32 bits when you’re doing the long dot products that are the heart of the fully-connected and convolution operations (and that take up the vast majority of the time) you don’t need float though, you can keep all your inputs and output as eight bit. I’ve even seen evidence that you can drop a bit or two below eight without too much loss! The pooling layers are fine at eight bits too, I’ve generally seen the bias addition and activation functions (other than the trivial relu) done at higher precision, but 16 bits seems fine even for those.”
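The pattern described in this quote, eight-bit inputs with a 32-bit accumulator, can be sketched as follows. This is an illustration under stated assumptions rather than Warden's code: the helper names `quantize_int8` and `int8_dot` are hypothetical, and the symmetric scaling (one scale factor per vector, signed range -127 to 127) is a simplification chosen for brevity.

```python
import numpy as np

def quantize_int8(x):
    """Symmetric linear quantization to int8 (an assumed, simplified scheme).

    Returns the int8 values and the scale needed to map them back to floats.
    """
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale

def int8_dot(a_q, a_scale, b_q, b_scale):
    """Dot product of two int8 vectors, accumulated in 32-bit integers."""
    # Widen before multiplying so products and their sum do not overflow int8.
    acc = np.dot(a_q.astype(np.int32), b_q.astype(np.int32))
    return float(acc) * a_scale * b_scale

# Usage: compare against the float result on random data.
rng = np.random.default_rng(1)
a, b = rng.standard_normal(1024), rng.standard_normal(1024)
a_q, a_s = quantize_int8(a)
b_q, b_s = quantize_int8(b)
print(int8_dot(a_q, a_s, b_q, b_s), float(np.dot(a, b)))
```

Each product of two int8 values needs up to about 14 bits, and summing thousands of them needs more, which is why the accumulator is kept at 32 bits even though inputs and outputs stay at eight.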

He goes on to say that “training can also be done at low precision. Knowing that you’re aiming at a lower-precision deployment can make life easier too, even if you train in float, since you can do things like place limits on the ranges of the activation layers.”
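One way to read "place limits on the ranges of the activation layers" is to clip activations to a known interval, so that the mapping to eight bits is fixed ahead of time. The sketch below assumes a clipped ReLU with a hypothetical upper bound `ACT_MAX = 6.0`; both the bound and the function names are illustrative choices, not something specified in the blog entry.

```python
import numpy as np

ACT_MAX = 6.0  # hypothetical activation ceiling, chosen for illustration

def clipped_relu(x, act_max=ACT_MAX):
    """ReLU whose output is limited to the interval [0, act_max]."""
    return np.clip(x, 0.0, act_max)

def activation_to_uint8(x, act_max=ACT_MAX):
    """Map clipped activations onto the 256 levels of an unsigned byte."""
    return np.round(clipped_relu(x, act_max) * (255.0 / act_max)).astype(np.uint8)

def uint8_to_activation(q, act_max=ACT_MAX):
    """Recover approximate float activations from their 8-bit codes."""
    return q.astype(np.float32) * (act_max / 255.0)

# Usage: values outside [0, ACT_MAX] saturate; values inside lose at most one step.
x = np.array([-1.0, 0.0, 2.5, 6.0, 100.0])
codes = activation_to_uint8(x)
print(codes, uint8_to_activation(codes))
```

Because the range is known in advance, no per-batch minimum or maximum has to be computed at inference time, and the quantization step is a constant `ACT_MAX / 255`.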

Moussa and co-researchers found that a fixed-point representation ran 12 times faster than floating point on the same Xilinx FPGA hardware. If one can relax the precision of neural nets when deployed and/or during training, then higher performance may be realizable at lower cost, with a smaller memory footprint and lower power consumption. The use of heterogeneous architectures employing GPUs, FPGAs or other special purpose hardware becomes even more feasible.

For example, nVidia has just announced a system they call the “World’s first Deep Learning Supercomputer in a Box”. nVidia’s GIE inference engine software supports 16-bit floating point representations. Microsoft is pursuing the FPGA route, claiming that FPGA designs provide competitive performance for substantially less power.

This is such an interesting area, with manycore chips such as Intel’s Xeon Phi, nVidia’s GPUs and various FPGAs jockeying for position in the very hot Deep Learning marketplace.

OrionX will continue to monitor AI and Deep Learning developments closely.

References:

Gupta, S., Agrawal, A., Gopalakrishnan, K., Narayanan, P. 2015, “Deep Learning with Limited Numerical Precision”, https://arxiv.org/pdf/1502.02551.pdf

Jin, L. et al. 2014, “Training Large Scale Deep Neural Networks on the Intel Xeon Phi Many-Core Processor”, proceedings, Parallel & Distributed Processing Symposium Workshops, May 2014

Moussa, M., Areibi, S., and Nichols, K. 2006, “Arithmetic Precision for Implementing BP Networks on FPGA: A case study”, Chapter 2 in FPGA Implementations of Neural Networks, ed. Omondi, A. and Rajapakse, J.

Warden, P. 2015, “Why are Eight Bits Enough for Deep Neural Networks”, https://petewarden.com/2015/05/23/why-are-eight-bits-enough-for-deep-neural-networks/

Stephen Perrenod has lived and worked in Asia, the US, and Europe and possesses business experience across all major geographies in the Asia-Pacific region. He specializes in corporate strategy for market expansion, and cryptocurrency/blockchain on a deep foundation of high performance computing (HPC), cloud computing and big data. He is a prolific blogger and author of a book on cosmology.